Editor’s note: Alina Zhiltsova is an NLP Data Scientist on the iCIMS Talent Cloud AI team.

When it comes to AI, HR professionals have a list of concerns and questions about how it works, whether it’s ethical, and how reliable it is. As a result, many may be missing out on using this powerful technology to their advantage. In fact, according to ZDNet, almost 70% of C-level leaders don’t know how AI model decisions or predictions are made, and only 35% said their organization uses AI in a way that’s transparent and accountable. These challenges are real.

However, implementing ethical AI tools in your recruiting and hiring processes, with buy-in from all levels of your organization, can help to reduce bias and improve your diversity, equity, and inclusion (DEI) outcomes.

At iCIMS, we evolve our AI-enabled recruiting software according to best practices and global regulations so you can cut through the confusion and feel confident you are recruiting more ethically to build your winning workforce. Our AI teams follow initiatives spelled out by industry leaders such as the Responsible AI Institute (formerly AI Global), an organization that continually assesses all major responsible AI initiatives worldwide to create a framework called the Responsible AI Trust Index.

You can count on AI in iCIMS to uphold these best practices and to explain how it works, so you can use these tools to help reduce bias and meet your DEI goals.

You can consider an algorithm fair when each candidate is assessed solely on their skills and experience. A fair AI-enabled tool will surface and prioritize two CVs from candidates with similar skills and experience equally, regardless of demographic information. An unfair algorithm, on the other hand, will favor one of the candidates based on gender, race, or ethnicity because that group was historically more represented in a particular role.
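One way to make this definition concrete is a counterfactual check: change only the demographic field on a candidate record and confirm the score does not move. The sketch below is a minimal illustration under stated assumptions. The `Candidate` record, the `fair_score` function, and its weights are all hypothetical and are not iCIMS code.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Candidate:
    skills: frozenset
    years_experience: int
    gender: str  # demographic field; a fair scorer must ignore it


def fair_score(candidate, required_skills):
    """Hypothetical scorer that looks only at skills and experience."""
    skill_match = len(candidate.skills & required_skills) / len(required_skills)
    experience = min(candidate.years_experience, 10) / 10
    return 0.7 * skill_match + 0.3 * experience  # illustrative weights


def is_counterfactually_consistent(scorer, candidate, required_skills, alternatives):
    """True if changing only the demographic field never changes the score."""
    baseline = scorer(candidate, required_skills)
    return all(
        scorer(replace(candidate, gender=value), required_skills) == baseline
        for value in alternatives
    )


required = frozenset({"python", "sql", "nlp"})
applicant = Candidate(frozenset({"python", "nlp"}), 6, "female")
print(is_counterfactually_consistent(fair_score, applicant, required, ["male", "nonbinary"]))
```

A scorer that reads the `gender` field in any way that shifts the result would fail this check for at least one alternative value.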

Algorithmic bias is difficult to identify in deep learning because it is not clear at what stage the bias is introduced. For this reason, most vendors have a difficult time showing how their AI/ML algorithms make decisions; in other words, the algorithms don’t show their work. Since your recruiting teams can’t fix what they can’t see, it’s iCIMS’ priority to be transparent with our AI and consistently measure the fairness of the algorithms we use in our products. Our team constantly tests our models to make sure they are fair.
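As a rough illustration of what measuring fairness can look like in practice, the sketch below compares how often candidates from different groups are shortlisted. This is one simple, commonly used style of check and is an assumption for illustration; the article does not say which metrics iCIMS uses. The group labels and outcomes are made up.

```python
from collections import defaultdict


def selection_rates(records):
    """Map each group to its shortlist rate, given (group, was_shortlisted) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_shortlisted in records:
        totals[group] += 1
        selected[group] += int(was_shortlisted)
    return {group: selected[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Largest difference in selection rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Made-up outcomes for illustration only.
outcomes = [
    ("group_a", True), ("group_a", False), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]
rates = selection_rates(outcomes)
print(rates)
print(max_rate_gap(rates))
```

A large gap does not prove the model is unfair on its own, but it is a signal that the model and its training data deserve a closer look.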

To understand how our data scientists create ethical AI technology, it’s important to know that many of our models use word vectors. Word vectors are a way to represent words as numbers in a vector space, which makes it possible to perform predictions and calculations on text documents. They can also be a common source of bias in natural language processing, which deals with texts such as resumes, CVs, and cover letters.

The easiest way to understand word vectors is to imagine a map. On a map, you can find the names of cities; some are physically closer to each other than others. For example, the geographic coordinates of Glasgow and Stuttgart are closer together than those of Glasgow and New York. In a word vector space, Glasgow and Stuttgart would likewise sit closer to each other than either does to New York.
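To make the map analogy concrete, the short sketch below treats each city as a pair of coordinates and computes the great-circle distance between them with the haversine formula. The coordinates are approximate city-center values, and the function name is just for illustration.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0

# Approximate (latitude, longitude) in degrees.
CITIES = {
    "Glasgow": (55.8642, -4.2518),
    "Stuttgart": (48.7758, 9.1829),
    "New York": (40.7128, -74.0060),
}


def haversine_km(city_a, city_b):
    """Great-circle distance in kilometres between two named cities."""
    lat1, lon1 = map(radians, CITIES[city_a])
    lat2, lon2 = map(radians, CITIES[city_b])
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


print(round(haversine_km("Glasgow", "Stuttgart")))
print(round(haversine_km("Glasgow", "New York")))
```

The first distance comes out several times smaller than the second, which is the sense in which two points are "closer" on the map.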

Like coordinates on a map, word vectors are numeric representations of the common words people use to communicate daily. Just like cities on a map, word vectors sit at different distances from one another in a vector space. Words that are closer in meaning will also be closer to each other in that space.

For example, “kitten” will be closer to “puppy” and “cat” than to “constellation.” A machine learning algorithm will determine how related those words are. If one vector moves, all the vectors adjust their position in the vector space; one tiny change can set off a domino effect.

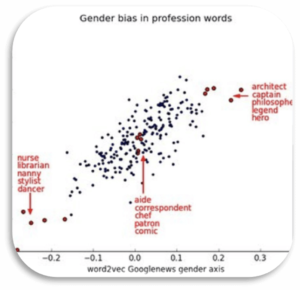
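Closeness between word vectors is often measured with cosine similarity, which compares the direction of two vectors. The sketch below uses tiny, made-up three-dimensional vectors purely for illustration; real word vectors are learned from text and typically have hundreds of dimensions.

```python
from math import sqrt

# Made-up vectors for illustration only; not taken from a trained model.
vectors = {
    "kitten": [0.9, 0.8, 0.1],
    "cat": [0.8, 0.9, 0.1],
    "puppy": [0.7, 0.6, 0.2],
    "constellation": [0.1, 0.0, 0.9],
}


def cosine_similarity(u, v):
    """Cosine of the angle between u and v: near 1.0 means very similar direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = sqrt(sum(a * a for a in u))
    norm_v = sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


for other in ("cat", "puppy", "constellation"):
    print(other, round(cosine_similarity(vectors["kitten"], vectors[other]), 3))
```

With these toy values, "kitten" scores much higher against "cat" and "puppy" than against "constellation".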

The same principles can be applied to recruitment AI. Depending on the modification, an algorithm could reduce or increase bias. For example, in the word vector image below, you’ll notice nurse, librarian, nanny, stylist, and dancer all share a close association: all are jobs that society has historically associated with women. So, unless taught otherwise, an algorithm might use that historical data to predict that women will make better nurses or librarians, regardless of skill.
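A common way to detect this kind of association is to build a "gender direction" from a pair of gendered words and see how far an occupation word leans along it. The sketch below is a minimal illustration using made-up vectors; the words, values, and the `gender_lean` helper are assumptions, not output from any real model.

```python
from math import sqrt

# Made-up 3-dimensional vectors for illustration only; not real embeddings.
vectors = {
    "he": [0.8, 0.3, 0.2],
    "she": [-0.7, 0.4, 0.2],
    "nurse": [-0.5, 0.9, 0.2],
    "engineer": [0.4, 0.1, 0.9],
}


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def unit(v):
    """Scale v to length 1."""
    length = sqrt(dot(v, v))
    return [a / length for a in v]


# Direction that separates the two gendered words, scaled to length 1.
gender_direction = unit([a - b for a, b in zip(vectors["he"], vectors["she"])])


def gender_lean(word):
    """Signed projection: positive leans toward 'he', negative toward 'she'."""
    return dot(vectors[word], gender_direction)


for word in ("nurse", "engineer"):
    print(word, round(gender_lean(word), 3))
```

In this toy space, "nurse" gets a negative score and "engineer" a positive one, which is the pattern a biased vector space would show for occupation words that should be gender-neutral.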

At iCIMS, we put a lot of effort into pre-processing and post-processing of our models to reduce the possibility of bias. One of the ways to do this is to adjust the distance between word vectors using industry best practices. By doing so, we ensure that the hundreds of thousands of words we have in our vector space will not bias the algorithm unfairly on the basis of someone’s gender, race, ethnicity, or other demographic identifiers.
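The article does not specify which adjustment iCIMS applies. As one illustration of what "adjusting the distance" can mean, the sketch below uses a widely discussed technique often called neutralizing: it removes the part of a word vector that points along a gender direction, so the word no longer leans either way. All vectors and names are made up for the example.

```python
from math import sqrt

# Made-up 3-dimensional vectors for illustration only; not real embeddings.
vectors = {
    "he": [0.8, 0.3, 0.2],
    "she": [-0.7, 0.4, 0.2],
    "nurse": [-0.5, 0.9, 0.2],
}


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def unit(v):
    """Scale v to length 1."""
    length = sqrt(dot(v, v))
    return [a / length for a in v]


def neutralize(vector, direction):
    """Remove the part of `vector` that lies along the unit-length `direction`."""
    scale = dot(vector, direction)
    return [a - scale * d for a, d in zip(vector, direction)]


gender_direction = unit([a - b for a, b in zip(vectors["he"], vectors["she"])])

lean_before = dot(vectors["nurse"], gender_direction)
nurse_neutral = neutralize(vectors["nurse"], gender_direction)
lean_after = dot(nurse_neutral, gender_direction)

print(round(lean_before, 3), round(lean_after, 3))
```

After the adjustment, the projection of "nurse" onto the gender direction is effectively zero, while the rest of the vector (the part that carries its job-related meaning) is left untouched.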

iCIMS teams consistently measure bias and research bias mitigation using the method discussed above, and we are always on the lookout for the latest state-of-the-art research, such as updates from the Responsible AI Institute.

To give employers more confidence in their hiring technology, iCIMS promotes DEI through responsible AI across the iCIMS Talent Cloud and keeps the way it works transparent. This way, you know how your AI-powered recruitment technology is being used and that it’s being used ethically.

Now that you know how AI can reduce bias in your recruiting process, you can more easily present to your leadership team the value of using AI responsibly and contribute to building a more equitable workforce.